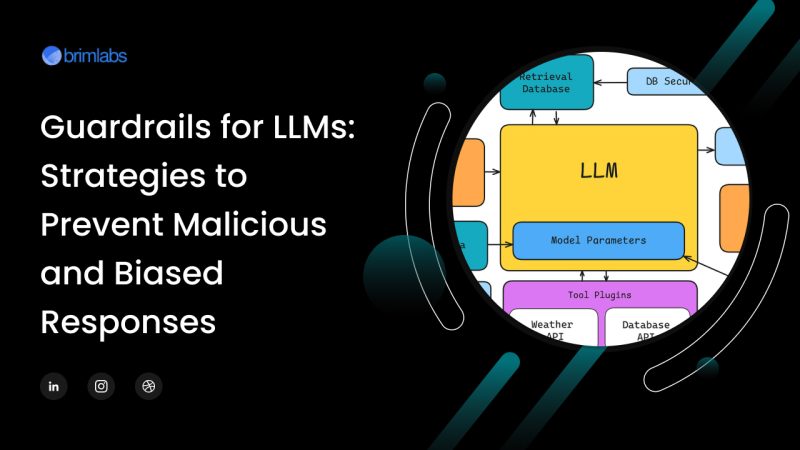

LLMs have become the cornerstone of modern AI applications, from powering intelligent chatbots to enabling advanced content generation, summarization, and customer support systems. However, their very strength, the ability to generate human-like text, also introduces serious risks. LLMs can inadvertently generate malicious, biased, or misleading content, leading to reputational damage, ethical concerns, and even legal liabilities for businesses.

To ensure the safe and responsible deployment of LLMs, it’s essential to build robust guardrails and technical and strategic measures that prevent harmful outputs while preserving the model’s usefulness. In this blog, we’ll explore the key strategies for mitigating these risks and setting up guardrails that ensure LLMs act responsibly in real-world applications.

Why do Guardrails Matter?

Without proper oversight, LLMs can:

- Spread misinformation or harmful stereotypes.

- Produce toxic or offensive language.

- Be manipulated to output malicious content (prompt injection attacks).

- Amplify existing societal biases hidden in training data.

- Generate responses that violate privacy, ethics, or organizational policy.

The potential consequences? Loss of trust, legal repercussions, brand damage, and missed opportunities in regulated industries like finance, healthcare, and education.

Fine-tuning with Curated Datasets

Custom fine-tuning allows developers to adapt LLMs to specific domains while removing harmful behavior patterns. By training the model on ethically reviewed, high-quality datasets, you can reduce the likelihood of it producing biased or unsafe content.

Reinforcement Learning from Human Feedback

RLHF is a powerful technique where human reviewers rank model outputs, and the model learns from these preferences. It helps LLMs align more closely with human values, social norms, and business ethics.

Rule-Based Content Filters

Before or after model generation, implement rule-based filters to screen for problematic content. These can include:

- Keyword-based filters for profanity, hate speech, or PII.

- Regex patterns for phone numbers, addresses, or email leakage.

- Topic blockers for sensitive or restricted domains.

Prompt Engineering and Template Design

Designing safer prompts is a front-line defense against unsafe outputs. A thoughtful prompt structure can guide the LLM away from risky territory.

- Use instructional phrasing that encourages neutrality and factuality.

- Avoid vague or open-ended inputs that could lead to hallucinations.

- Design fallback templates that redirect unsafe or out-of-scope queries.

Moderation APIs and Human-in-the-Loop Systems

Integrate automated moderation tools like OpenAI’s moderation endpoint or Google’s Perspective API to catch flagged content in real time. For high-risk domains, involve human reviewers as the final check for sensitive interactions.

Differential Privacy and Data Anonymization

LLMs can unintentionally memorize and regurgitate sensitive training data. Techniques like differential privacy help prevent this by introducing noise to training inputs, ensuring the model doesn’t leak real user data.

Model Auditing and Red Teaming

Regularly audit your model with red-teaming exercises, where experts try to “break” the model by prompting it into generating biased or harmful content. This proactive approach reveals:

- Edge-case failures.

- Potential jailbreak techniques.

- Hidden biases or systemic risks.

Custom Guardrails for Enterprise Applications

Enterprises may require domain-specific safety protocols, especially when dealing with regulated industries. Tailor your guardrails to:

- Comply with GDPR, HIPAA, or industry-specific guidelines.

- Match the brand tone and compliance policies.

- Respect cultural norms and global sensitivity.

The Road Ahead: Striking the Right Balance

No guardrail system is perfect, and the key is to adopt a layered defense strategy. Each safety layer, from prompt design to moderation APIs adds resilience. But building these guardrails requires deep expertise in AI, domain knowledge, and an ethical lens.

How Brim Labs Can Help?

At Brim Labs, we specialize in developing safe, scalable, and ethically responsible AI solutions. From designing custom LLM pipelines to implementing privacy-aware moderation layers, our team helps businesses across healthcare, fintech, and SaaS industries integrate trustworthy AI into their products.

If you’re building with LLMs and want to ensure your systems are aligned, secure, and bias-mitigated, let’s connect. Brim Labs is your trusted partner for AI-driven innovation with guardrails built in.