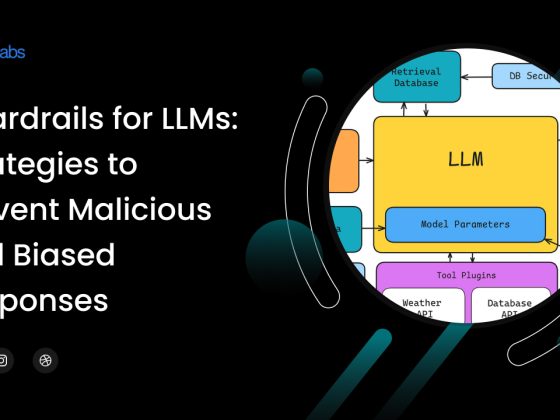

As large language models (LLMs) evolve, they are no longer limited to just text. Today’s LLMs are multi-modal—they can understand, generate, and reason across various inputs like text, images, audio, and even video. This new ability opens the door to powerful applications, such as smart virtual assistants, real-time translation systems, medical diagnostics, and creative design tools.

However, with greater power comes greater responsibility.

As these models grow more advanced, we must also strengthen the guardrails—the tools and methods we use to guide, monitor, and control their behavior. This becomes especially important when multiple data types are involved. In this blog, we’ll explore why multi-modal guardrails are necessary, the challenges they pose, and how organizations can use them to build safer AI systems.

Understanding Multi-Modal LLMs

A multi-modal LLM is trained to work with different types of inputs and outputs. Earlier models like GPT-3 focused mainly on text. But now, newer models such as GPT-4, Gemini, and Claude can handle text, images, and audio together.

Here are some real-world examples of how multi-modal models are used:

- Customer service bots that understand spoken questions and respond with visuals.

- Tools for the visually impaired that describe images using audio in real-time.

- Creative design assistants that create visuals based on both text and image inputs.

Because these models process different types of information together, their behavior is more complex and harder to predict or control.

Why Do We Need Guardrails for Multi-Modal AI?

1. Preventing Misuse

Multi-modal LLMs can unintentionally generate harmful content. For example, they might produce deepfakes, misleading images, or biased transcripts. Without the right protections, such tools could be used for spreading misinformation, committing fraud, or even harassment.

2. Ensuring Legal and Ethical Compliance

Each type of data is governed by its own laws and ethical rules. For instance:

- Text might need to follow content guidelines.

- Images could risk violating privacy regulations like GDPR.

- Audio may require the speaker’s permission.

Proper guardrails ensure that multi-modal systems meet legal and ethical standards across all formats.

3. Reducing Bias Across Modalities

Different inputs bring different types of bias. For example:

- A model may misinterpret certain accents in audio.

- It could link specific images with unfair stereotypes.

Guardrails help catch and correct these multi-modal biases, which may be hard to spot in text-only systems.

Components of Effective Multi-Modal Guardrails

To build safe multi-modal systems, developers must use a layered approach. This includes:

1. Input Preprocessing Filters

- Screen for harmful images or hate speech in text.

- Detect and remove unwanted noise or dangerous audio content.

- Use tools like OCR and image captioning to better understand visual inputs before the model uses them.

2. Real-Time Monitoring

- Watch model outputs to ensure they match the intended input.

- Detect hallucinated facts, offensive language, or misleading visuals as they happen.

3. Contextual Alignment

- Make sure outputs fit the user’s request, values, and context.

- For instance, a voice command like “images of doctors” should return a fair and diverse set of images.

4. Output Moderation Pipelines

- Combine language processing, image analysis, and audio checks to catch harmful outputs.

- Use techniques like Reinforcement Learning from Human Feedback (RLHF) across all modalities.

5. Audit Logs and Traceability

- Keep records of decisions made by the AI, especially in critical sectors like healthcare.

- Allow teams to explain how and why the model made certain choices.

Challenges in Building Multi-Modal Guardrails

1. Complex Interactions Between Modalities

Meaning can shift when moving from one format to another. A phrase might sound fine in text but be harmful when turned into an image or audio response.

2. Speed vs. Safety

Guardrails must work fast enough for real-time systems but also be thorough. Striking the right balance is difficult.

3. Diverse Training Data Needs

Training these models and their safeguards with inclusive and balanced data is both costly and time-consuming.

4. Poor Generalization Across Modalities

What works for text may not work for images or audio. Guardrails must understand deeper context—not just surface features.

What’s Next for Multi-Modal Guardrails?

As LLMs move toward general intelligence across all formats, future guardrails will likely include:

- Cross-Modal Explainability: Showing how the model understood and processed a prompt across different types of input.

- Customizable Safety Settings: Letting users or companies set their own moderation rules.

- Federated Guardrails: Running safety checks locally on devices, in addition to the cloud.

To build these tools responsibly, it’s important for AI developers, regulators, and researchers to work together.

Conclusion: Building Safer AI with Brim Labs

At Brim Labs, we believe the true power of AI isn’t just in what it can do—but in how safely and ethically it operates. Our team of AI/ML experts and full-stack developers specializes in building multi-modal systems that are secure, compliant, and aligned with user intent.

Whether you’re developing a voice assistant, a visual SaaS tool, or a healthcare AI product, we help you go to market faster, smarter, and safer—with built-in guardrails every step of the way.