For years, the AI conversation has been dominated by massive general-purpose language models like GPT-4, Claude, and Gemini. These models, widely reported to run to hundreds of billions of parameters, are breathtakingly capable, but they are also expensive to run, energy-intensive, and often overkill for real-world business applications.

Enter a new trend that’s quietly reshaping the landscape: smaller, task-specific language models. Instead of trying to answer every question in the universe, these lean models are optimized for specific domains, workflows, or tasks, and within those domains they’re proving to be faster, cheaper, easier to secure, and in many cases more accurate than their larger cousins.

In this post, we’ll explore what’s driving this shift, how businesses are adopting task-specific LLMs, and real-world examples that show why small is the new big.

Why the Shift Toward Task-Specific LLMs?

1. Efficiency at Every Level

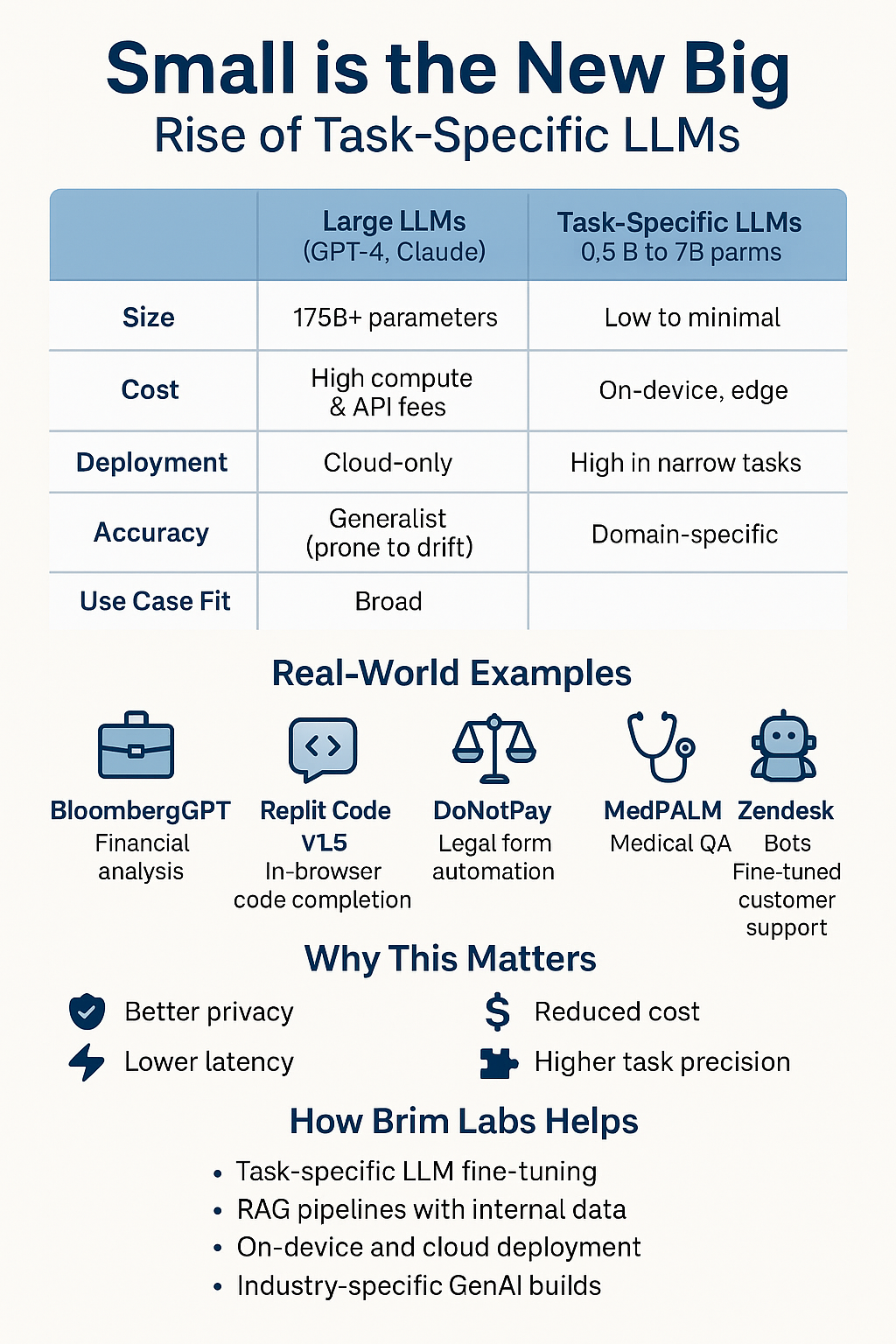

Large LLMs consume massive GPU resources: serving every query through a frontier model can take many times the compute of a small, locally deployed model. Small open models such as Mistral-7B and TinyLlama-1.1B, common starting points for task-specific fine-tunes, can run on laptops, edge devices, or even in the browser (via WebAssembly or WebGPU), especially once quantized, significantly reducing inference costs and carbon footprint.
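A back-of-the-envelope calculation shows why 1B to 7B models fit on a laptop: the memory needed just to hold the weights is roughly the parameter count times the bytes stored per parameter. The sketch below assumes 2 bytes per parameter at 16-bit precision, 1 byte at 8-bit, and 0.5 bytes at 4-bit, uses rounded parameter counts, and deliberately ignores the KV cache, activations, and runtime overhead, so real-world usage will be higher.

```python
# Approximate bytes needed to store one parameter at each precision (assumed values).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and framework overhead.
    """
    return n_params * BYTES_PER_PARAM[precision] / 1e9


# Rounded parameter counts, for illustration only.
models = {"TinyLlama-1.1B": 1.1e9, "Mistral-7B": 7.3e9}
for name, n_params in models.items():
    for precision in BYTES_PER_PARAM:
        print(f"{name:<16} {precision}: {weight_memory_gb(n_params, precision):5.1f} GB")
```

By this estimate a 7B-class model needs roughly 14 to 15 GB for its weights at 16-bit precision but only a few gigabytes at 4-bit, which is what makes laptop and edge deployment practical.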

2. Customization with Less Data

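With parameter-efficient techniques like LoRA (Low-Rank Adaptation) and QLoRA (LoRA applied on top of a 4-bit quantized base model), fine-tuning small models on just a few thousand task-specific examples can yield strong results. Because only a small set of adapter weights is trained, the work fits on modest hardware, making it feasible for startups and teams without million-dollar budgets to deploy purpose-built AI tools.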
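The core idea of LoRA is to freeze a pretrained weight matrix W and learn only a low-rank update B·A, scaled by alpha / r, so the layer computes h = W x + (alpha / r) · B (A x). The pure-Python sketch below uses toy matrices rather than a real model to show that forward pass and why the trainable parameter count is so small. The 4096-dimensional layer and rank r = 8 are illustrative assumptions, not settings taken from any specific model.

```python
def matvec(matrix: list[list[float]], vec: list[float]) -> list[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """LoRA forward pass: h = W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) are trained.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def trainable_params(d_out: int, d_in: int, r: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, r * (d_out + d_in)


# Toy 2x2 layer with a rank-1 adapter.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]    # r x d_in
B = [[1.0], [0.0]]  # d_out x r
x = [1.0, 2.0]
print(lora_forward(W, A, B, x, alpha=2.0, r=1))  # [7.0, 2.0]

full, lora = trainable_params(4096, 4096, r=8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For a single 4096 x 4096 matrix, the adapter trains about 0.39% of the parameters that full fine-tuning would touch. In the original LoRA formulation B starts at zero, so the adapted model initially behaves exactly like the base model; libraries such as Hugging Face PEFT apply this pattern to the layers of real models.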

3. Security and Control

Deploying smaller models locally or on private cloud infrastructure keeps sensitive data inside the organization’s own environment, which makes privacy and compliance far easier to manage, a key requirement in regulated industries like healthcare and finance.

4. Speed Matters

In real-time environments like customer chatbots or factory monitoring systems, milliseconds count. Smaller models generate tokens faster and can deliver low-latency responses on modest hardware, without heavy server infrastructure.
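One reason smaller models feel faster: when serving one user at a time, generating each token is usually limited by how quickly the hardware can stream the model’s weights from memory rather than by arithmetic. Under that simplifying assumption, a rough ceiling on generation speed is memory bandwidth divided by the size of the weights. The sketch below uses a hypothetical device with 100 GB/s of memory bandwidth and illustrative model sizes; real throughput will be lower.

```python
def max_decode_tokens_per_sec(n_params: float, bytes_per_param: float,
                              bandwidth_gb_s: float) -> float:
    """Rough upper bound on single-stream generation speed.

    Assumes decoding is memory-bandwidth bound, so every weight is read once per
    generated token. Ignores compute limits, KV-cache reads, and batching.
    """
    weight_gb = n_params * bytes_per_param / 1e9
    return bandwidth_gb_s / weight_gb


# Hypothetical device with 100 GB/s of memory bandwidth.
BANDWIDTH_GB_S = 100.0
small = max_decode_tokens_per_sec(7e9, 0.5, BANDWIDTH_GB_S)   # 7B model, 4-bit weights
large = max_decode_tokens_per_sec(70e9, 2.0, BANDWIDTH_GB_S)  # 70B model, 16-bit weights
print(f"7B @ 4-bit:   ~{small:.0f} tokens/s")
print(f"70B @ 16-bit: ~{large:.1f} tokens/s")
```

On the same hardware, the smaller quantized model has a speed ceiling roughly 40 times higher, which is the difference between a conversational response and a noticeable wait.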

Real-World Examples of Task-Specific LLMs

1. DoNotPay’s Legal Bot (Based on GPT-J)

DoNotPay, a consumer rights startup, developed a legal assistant that helps users fight parking tickets, cancel subscriptions, and navigate small claims court. Rather than relying solely on a general model, it reportedly used a smaller GPT-J variant fine-tuned on legal templates and case documents, keeping the model focused and efficient.

Why it works: Training on legal material narrows the model’s scope and reduces the risk of it “guessing” at legal procedures the way a general-purpose LLM might.

2. Replit’s Code Completion Engine (Replit Code v1.5)

While GitHub Copilot was originally built on OpenAI’s Codex, Replit chose to train its own small (roughly 3-billion-parameter) code model on public source code, with a focus on languages like Python and JavaScript. The result is a code completion engine served from Replit’s own infrastructure, giving developers in its browser-based IDE a seamless, low-latency experience without relying on an external API.

Why it works: Fast, task-optimized, and tuned specifically for their platform and users.

3. Med-PaLM (Google)

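Med-PaLM is Google’s adaptation of its Flan-PaLM model for medical question answering. While the original PaLM is general-purpose, Med-PaLM was aligned to the medical domain through instruction prompt tuning on clinician-written examples and evaluated on medical QA benchmarks such as MedQA (USMLE-style questions), making it suited to research on high-stakes medical reasoning.

Why it works: Specialization, not size, is the lever here. Med-PaLM builds on the 540-billion-parameter Flan-PaLM, so it is not small, but domain-specific tuning improved factuality and alignment with clinical consensus compared with the general base model.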

4. BloombergGPT

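Bloomberg created BloombergGPT, a 50-billion-parameter model (far smaller than frontier general-purpose LLMs) trained on a mix of roughly half financial data, including SEC filings, earnings call transcripts, and financial news, and half general-purpose text. It targets financial NLP tasks such as sentiment analysis, named-entity recognition, and news classification for Bloomberg’s in-house tools.

Why it works: Where general LLMs can fumble financial jargon, BloombergGPT speaks the native language of Wall Street. Bloomberg reported that it outperformed comparable open models on financial benchmarks while remaining competitive on general ones.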

5. Salesforce’s CodeGen2 and XGen

Salesforce built XGen-7B, a compact open-weight LLM trained on curated data with enterprise use in mind. Similarly, CodeGen2 is an open family of code-generation models (1B to 16B parameters) that Salesforce has applied to enterprise-level code generation and developer workflows.

Why it works: These models are designed with enterprise governance, security, and CRM productivity in mind.

Industries Rapidly Adopting Task-Specific LLMs

- Healthcare: Medical summarizers and radiology report generators (e.g., RadGPT or Med-PaLM) tailored to specific hospital workflows.

- E-commerce: AI product classifiers and copywriting assistants (e.g., Klarna’s shopping assistant) grounded in retail catalogues.

- Customer Support: Zendesk and Intercom are integrating AI models tuned on, and grounded in, a company’s own ticket history and support docs.

- Manufacturing: Predictive maintenance bots trained on sensor data and historical machine logs.

- Legal & Compliance: Contract review assistants trained on NDAs, SLAs, and data protection clauses.

The Technology Behind the Trend

Modern toolkits and frameworks are making it easier than ever to build and deploy smaller models:

- Hugging Face (Transformers and PEFT) simplifies fine-tuning small LLMs, while OpenLLM simplifies serving them and LangChain handles orchestration.

- Mistral 7B, Phi-2, Llama 2 7B, and TinyLlama have become go-to open models for startups.

- 8-bit and 4-bit quantization (e.g., GPTQ, AWQ, or llama.cpp’s GGUF formats) allows deployment on mobile and edge CPUs; QLoRA applies the same 4-bit idea during fine-tuning.

- Multi-agent systems combine several small LLMs, each handling a specialized task, to approach the capability of a larger system while keeping each component easier to control.
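The quantization bullet above boils down to storing weights as small integers plus a scale factor. The sketch below shows the simplest version, symmetric per-tensor 8-bit quantization, on a handful of made-up weights. Production schemes such as GPTQ, AWQ, and llama.cpp’s formats are more sophisticated (per-group or per-block scales, error-aware rounding), so treat this as an illustration of the idea rather than how those tools work internally.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization based on the largest absolute weight."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


weights = [0.42, -1.3, 0.07, 0.9, -0.25]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.5f}", f"max error={max_err:.5f}")
```

Each weight now takes one byte instead of two or four, and rounding guarantees the reconstruction error stays within half a quantization step; 4-bit schemes push the same trade-off further.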
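A minimal sketch of the multi-agent pattern from the last bullet: a router sends each request to a specialized model. The handler functions here are hypothetical stubs standing in for small fine-tuned models, and the keyword table is a deliberately simple placeholder for what would usually be a small intent-classification model.

```python
from typing import Callable


# Hypothetical stand-ins for small, specialized models. In a real system each
# would call a fine-tuned model served locally or behind an internal endpoint.
def billing_model(query: str) -> str:
    return f"[billing] {query}"


def tech_support_model(query: str) -> str:
    return f"[tech] {query}"


def general_model(query: str) -> str:
    return f"[general] {query}"


# Keyword routing table: a placeholder for a small intent-classification model.
ROUTES: dict[str, Callable[[str], str]] = {
    "invoice": billing_model,
    "refund": billing_model,
    "error": tech_support_model,
    "crash": tech_support_model,
}


def route(query: str) -> str:
    """Send the query to the first specialist whose keyword appears, else fall back."""
    lowered = query.lower()
    for keyword, handler in ROUTES.items():
        if keyword in lowered:
            return handler(query)
    return general_model(query)


for query in ["Where is my refund?", "The app shows an error on login", "What are your hours?"]:
    print(route(query))
```

Because each specialist sits behind its own entry in the routing table, it can be swapped, evaluated, or permission-restricted independently, which is where the extra control comes from.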

Small Models, Big Outcomes

The future of LLMs isn’t necessarily “bigger.” It’s smarter, more specific, and more sustainable. In the same way we moved from bloated desktop software to modular SaaS platforms, AI is shifting from all-in-one giants to focused, plug-and-play intelligence.

Small LLMs:

- Can outperform larger models in narrow domains

- Are cheaper to train and run

- Are easier to control and govern

- Can be deployed securely, even offline

- Enable innovation in edge computing, mobile apps, and regulated environments

How Brim Labs Helps You Build Smart, Focused LLM Solutions

At Brim Labs, we help businesses move from generic AI to custom-built, efficient, and reliable AI agents. We specialize in:

- Fine-tuning open-source LLMs like Mistral, LLaMA, and Phi

- Building RAG-based systems around internal knowledge bases

- Deploying lightweight models for specific verticals: healthcare, SaaS, compliance, and customer support

- Integrating small LLMs into web and mobile applications with full-stack support

If you’re exploring how to adopt GenAI without burning through compute budgets or compromising on performance, we can help.

👉 Let’s build AI that fits your business, not the other way around.

Explore our work at www.brimlabs.ai